A3CP: a shared foundation

A3CP is the underlying open platform that handles sensing, classification, clarification, and logging. Everything else, including prototypes, deployments, and research tools, is built on top of this shared core.

GestureLabs e.V. · In collaboration with The Open University (UK)

GestureLabs is a non-profit organisation developing the Ability-Adaptive Augmentative Communication Platform (A3CP) together with academic, practical, and care partners. A3CP is not a product or a device. It provides a shared architecture for building communication systems that can adapt to how different people express themselves through movement, sound, and everyday interaction, and is based on the principle that systems should adapt to the user rather than requiring people to adapt to the system.

The meaning of embodied signals is often context-dependent and may shift across environments, activities, or over developmental time. A movement or sound can carry different intent at home, at school, or during care routines.

This variability makes consistent interpretation difficult and limits the effectiveness of systems that rely on fixed mappings or static training data.

Some non-speaking individuals communicate through unique combinations of movement, sound, facial expression, and timing. These expressions do not map cleanly onto standardized symbols or fixed vocabularies and often differ substantially from person to person.

While meaningful, such signals typically require personalized interpretation and cannot be assumed to generalize across users, settings, or support staff.

Understanding these signals often depends on close carers who have learned an individual’s communication patterns through long-term exposure and shared experience.

This knowledge is rarely formalized, transferable, or supported by technical systems, leading to breakdowns in understanding when staff change or settings shift.


Variants are named designs tuned to different communication needs and contexts. Each variant defines typical hardware setups, clarification strategies, and output channels for a given pattern of abilities.

Deployments are per-person configurations running in real settings. Each starts from a variant and is adapted over time as the person’s abilities, environment, and goals change.
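To make this layering concrete, the following is a minimal sketch of how a per-person configuration might start from a variant and then be adapted. It is an illustration under stated assumptions, not the A3CP codebase: the names `Variant`, `PersonConfig`, and `interpret`, the confidence threshold, and the example signals and meanings are all hypothetical.

```python
from dataclasses import dataclass, replace
from typing import Dict, List, Tuple

# Hypothetical illustration only: none of these names come from A3CP itself.


@dataclass(frozen=True)
class Variant:
    """A named design: typical hardware, outputs, and clarification strategy."""
    name: str
    sensors: Tuple[str, ...]
    output_channels: Tuple[str, ...]
    clarify_below: float  # ask for clarification under this confidence


@dataclass(frozen=True)
class PersonConfig:
    """A per-person configuration that starts from a variant."""
    person_id: str
    variant: Variant
    # Personal mapping from (signal, context) to an intended meaning.
    meanings: Dict[Tuple[str, str], str]


def interpret(config: PersonConfig, signal: str, context: str,
              confidence: float, log: List[dict]) -> str:
    """Return a meaning, or "clarify" when the signal is unknown or uncertain."""
    meaning = config.meanings.get((signal, context))
    if meaning is None or confidence < config.variant.clarify_below:
        result = "clarify"
    else:
        result = meaning
    # Every decision is logged so it can be reviewed later.
    log.append({"person": config.person_id, "signal": signal,
                "context": context, "confidence": confidence,
                "result": result})
    return result


# A variant defines defaults for a pattern of abilities (assumed values).
base = Variant(name="camera-and-audio",
               sensors=("camera", "microphone"),
               output_channels=("speech",),
               clarify_below=0.7)

# A deployment starts from the variant and is adapted for one person.
config = PersonConfig(
    person_id="p1",
    variant=replace(base, clarify_below=0.6),
    meanings={
        ("raise_left_arm", "mealtime"): "more, please",
        ("raise_left_arm", "school"): "I want to answer",
    },
)

log: List[dict] = []
interpret(config, "raise_left_arm", "mealtime", 0.9, log)  # "more, please"
interpret(config, "raise_left_arm", "school", 0.9, log)    # "I want to answer"
interpret(config, "raise_left_arm", "mealtime", 0.5, log)  # "clarify"
interpret(config, "raise_left_arm", "home", 0.9, log)      # "clarify"
```

In this sketch the same movement resolves to different meanings depending on context, and anything unknown or low-confidence falls back to a clarification step rather than a guess. A real system would derive signals and confidences from trained models rather than a lookup table.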

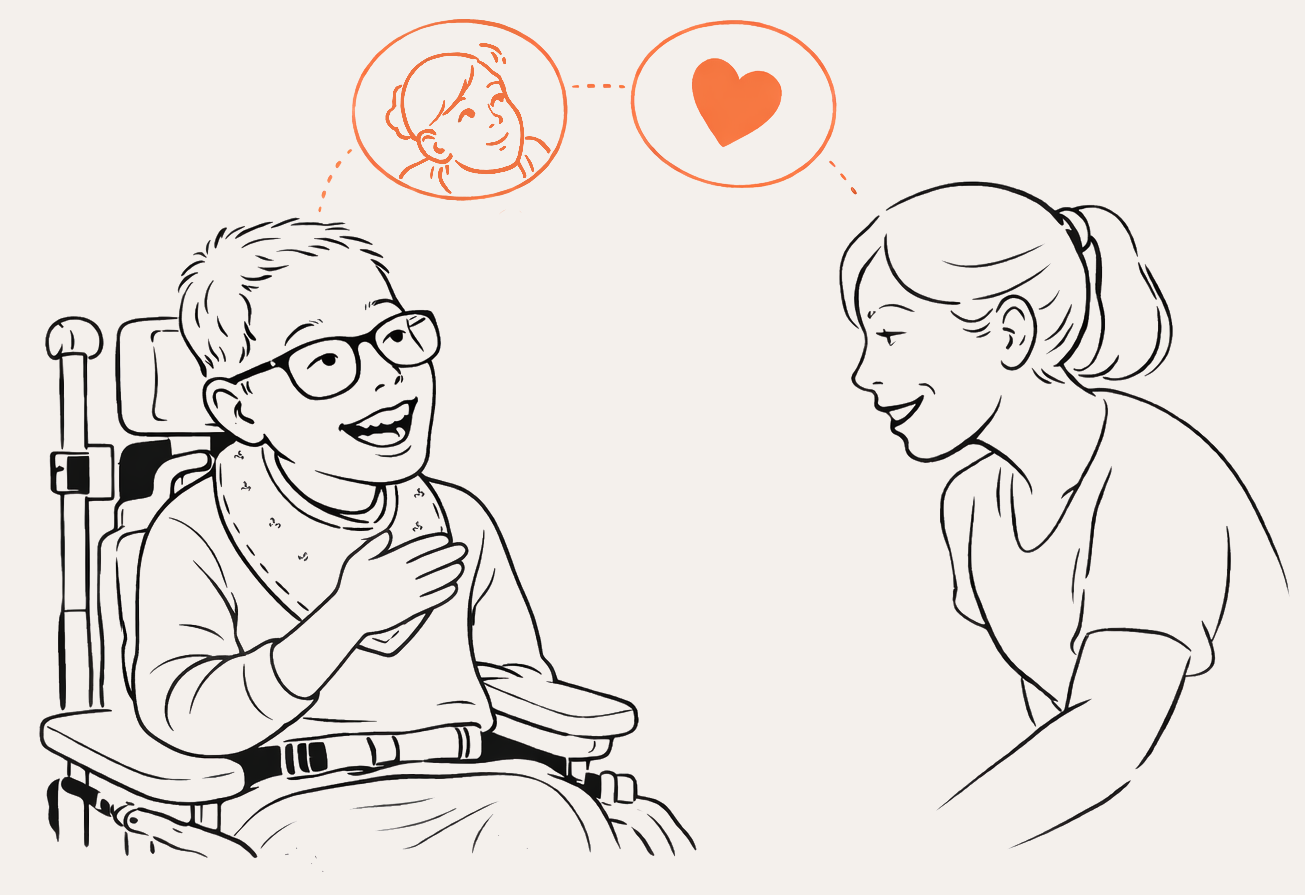

The impetus behind this research project is a deeply personal one. My ten-year-old son, Eric, is bilingual in his verbal understanding and has boundless happiness and enthusiasm, but he does not (yet) speak. His remarkable determination and ability to communicate in various non-verbal ways inspire and entertain those around him.

Try to imagine how frustrating and daunting it would be to be unable to express yourself freely, to be unable to communicate when you need or want something, and to be severely limited in your ability to interact with others. Being a mother of three children, including Eric – who has cerebral palsy – has allowed me to better understand the needs, challenges, and opportunities of people with limited communication abilities.

Through Eric’s eyes I realised how much we take for granted in life and imagined how technology could be used to enhance communication. There is no “one-size-fits-all” – individuals are unique in their ways of communicating, acting, and living. This uniqueness is even more prominent when there are physical and cognitive limitations.

Driven by the unique and diverse needs of people with disabilities, we are developing a novel personalised communication system that adapts to the abilities of individuals, instead of requiring individuals to learn and adapt to the system. The system will monitor individuals’ unique sounds, motions, and gestures, and translate these into easily understood communication. By combining advances and approaches from software engineering, human-computer interaction, design technologies, and AI, I am excited to contribute to empowering many people to express their thoughts, desires, and needs.

A3CP is developed by GestureLabs together with research partners at The Open University and external advisors in project development, social innovation, and engineering.

Human-Computer Interaction

Leads A3CP architecture, interaction design, and development direction. Co-founder, GestureLabs.

Project Development

Supports project development and organisational planning. Co-founder, GestureLabs.

Development Consultant

Advises on social innovation strategy and implementation pathways. External consultant.

Software Engineering (Open University)

Initiated the project and leads research collaboration and methodology.

Machine Learning Research (Open University)

Develops multimodal recognition models and machine learning pipelines.

Hardware & Prototyping (Open University)

Advises on hardware integration, sensing options, and prototyping.

Governance & roles

GestureLabs e.V. stewards the open platform; research and algorithm development are conducted with independent academic partners.

GestureLabs e.V. is responsible for maintaining the A3CP repository, developing the open-source codebase, and stewarding the platform as a non-profit initiative. The association oversees governance, licensing, documentation, and contribution processes to keep the infrastructure transparent, auditable, and accessible to care organisations, researchers, and developers.

Early work on multimodal models and interaction design was carried out at The Open University (UK), where Andrea Zisman, a professor and initiator of the project, led research and machine learning development. The Open University has funded the project to date and continues to contribute research methodology, evaluation design, and algorithmic work.

Research partners operate independently from platform stewardship. This separation preserves academic autonomy, allows results to be published openly, and makes it possible for additional institutions to collaborate without vendor lock-in or proprietary dependencies.

We are looking for partners in care, research, engineering, and policy who want to help shape ability-adaptive communication technology as open, transparent infrastructure.